Introduction

I'm a JBoss user, so I tend to develop against and deploy on a JBoss server (JBoss 7.1 being awesome to the max). But sometimes I look at what I have and think to myself: do I really *need* all those layers of complexity just to solve a simple problem? Take EJBs: powerful stuff, but are they really necessary when you are building a simple webapp? Even though JEE6 makes it easy by allowing you to deploy EJBs inside the war, I still don't think so. Do you really need container-managed stuff? Other than a datasource: not really.

Instead of plowing ahead and bolting on everything that other smart people come up with, sometimes it is good to take a step back and return to the basics. I want to create a webapp, a simple war, do some simple JPA/Hibernate 4 database work and create some pages using JSF 2.1 and Primefaces (or whatever component library you fancy, if any). And I want to (hot-)deploy it on what is, in my opinion, the simplest and most stable Java servlet container of all: Apache Tomcat 7. Aha, but I'm still a power user: I also want to use Maven and Eclipse. The plot thickens. Let's see what we can do...

Please be aware that I'm not aiming to teach you how to create web applications; that is a subject that has filled many books, and I won't make even a weak attempt at it, although I do my best to fill in as many blanks as possible. I assume you already know your way around web development, JSF, persistence and Maven. The main focus of this article is to bring everything together into a neat, manageable package. To do that I'm splitting the article into three sections: first the setup of the tools, then getting JSF up and running and hot-deploying, and finally adding the database layer.

Setup tools

Of course you need to download and install Tomcat 7 and Eclipse; for both, get the latest releases. I'm assuming you are using at least Eclipse 3.7 SR1. We are going to control Tomcat from within Eclipse, so there is no need to install it as a Windows service (assuming you are doing this on Windows). Just get the zip, unpack it and you're almost done.

To communicate with a database you need a JDBC driver; Java databases 101. Tomcat will be managing the connection pool for us, so it is Tomcat that needs access to the driver. You cannot simply deploy the driver with your application; it needs to be on the server classpath. To do this, put the JDBC driver jar in the lib subdirectory of the Tomcat installation; easy and simple. In the examples I am using HSQLDB.

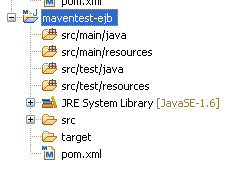

Since I'm a Maven dude, that is what I'll document. All you need to do is create a simple Maven project. No need for a multi-module setup, just create a single project with packaging type 'war'. After creation there is always the dreaded dependency setup. For now, let's add the bare minimum. For more help with Maven, my article on Maven might help, as might my article on using Maven from Eclipse.

```xml
<dependencies>
  <dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-api</artifactId>
    <version>1.6.4</version>
  </dependency>
  <dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.14</version>
  </dependency>
  <dependency>
    <groupId>org.slf4j</groupId>
    <artifactId>slf4j-log4j12</artifactId>
    <version>1.6.4</version>
  </dependency>
```

This covers our logging needs. I use log4j, which is perhaps a bit outdated; you could also simply use java.util.logging (JUL) and save yourself a dependency. I've tried that myself, but so far I haven't managed to configure it properly to get something as simple as a dedicated log file for my own application.
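If you want to give JUL another try, the piece that should produce a dedicated log file is a FileHandler attached to the logger named after your root package, with parent handlers switched off so records don't also end up on the console. Below is a minimal sketch that does this programmatically rather than through a logging.properties file; the class name AppFileLogging, the package name and the file pattern are all placeholders of my own, not something the rest of this setup depends on.

```java
import java.io.IOException;
import java.util.logging.FileHandler;
import java.util.logging.Level;
import java.util.logging.Logger;
import java.util.logging.SimpleFormatter;

/** Hypothetical helper: route one logger hierarchy to its own file using only java.util.logging. */
final class AppFileLogging {

    // JUL keeps only weak references to loggers; hold on to ours so the
    // configured handler isn't lost when the logger is garbage collected.
    private static Logger appLogger;

    private AppFileLogging() {
    }

    /** Attach a FileHandler to the logger named rootPackage; all loggers below that name inherit it. */
    static synchronized Logger configure(String rootPackage, String filePattern) {
        try {
            FileHandler handler = new FileHandler(filePattern, true); // append to an existing file
            handler.setFormatter(new SimpleFormatter());
            Logger logger = Logger.getLogger(rootPackage);
            logger.addHandler(handler);
            logger.setLevel(Level.FINE);
            // Stop records from also travelling up to the root logger's console handler.
            logger.setUseParentHandlers(false);
            appLogger = logger;
            return logger;
        } catch (IOException e) {
            throw new IllegalStateException("Cannot open log file " + filePattern, e);
        }
    }
}
```

Calling something like `AppFileLogging.configure("com.yourpackage", "%h/testapp.log")` once at startup should then send everything logged under com.yourpackage to that file (%h being the user's home directory in FileHandler patterns).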

```xml
  <dependency>
    <groupId>org.apache.tomcat</groupId>
    <artifactId>tomcat-servlet-api</artifactId>
    <version>7.0.54</version>
    <scope>provided</scope>
  </dependency>
</dependencies>
```

This dependency allows us to compile Servlet 3.0 code. Note that it has provided scope, as Tomcat itself of course already provides the servlet API at runtime.

The above dependencies will likely be the same for all web projects you do. Later we are going to add a couple more to deal with JSF and JPA. For now, let's wrap up with a basic plugin configuration:

```xml
<build>
  <plugins>
    <plugin>
      <artifactId>maven-compiler-plugin</artifactId>
      <inherited>true</inherited>
      <configuration>
        <source>1.7</source>
        <target>1.7</target>
        <encoding>UTF-8</encoding>
      </configuration>
    </plugin>
    <plugin>
      <artifactId>maven-resources-plugin</artifactId>
      <configuration>
        <encoding>UTF-8</encoding>
      </configuration>
    </plugin>
  </plugins>
</build>
```

At this point you will want to refresh your project settings (right-click the project -> Maven -> Update Project) so that the Eclipse settings match the Maven settings (Java 7 being used). You could of course also specify 1.8 if you're going to be running on Java 8.

RECAP: At this point you have

- installed Tomcat 7 or higher and Eclipse 3.7 or higher

- added a JDBC driver to tomcat/lib

- created an Eclipse workspace and a Maven project with war packaging, and added the above to the pom

- checked the build path for exclusions on the resources directories; there likely are some, and you need to remove them or hot-deployment will not work properly

- kept an eye on the deployment assembly during the setup stages; for some reason it can turn into a mess with a Mavenized project

Config files

To properly set up the application based on the choices I've made, we need a pile of configuration files. Let's tackle them one at a time.

webapp/WEB-INF/web.xml

The main configuration file for a Java webapp. Right now it's quite bare; we'll be adding to it later.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<web-app xmlns="http://java.sun.com/xml/ns/javaee"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_3_0.xsd"
         version="3.0">
  <display-name>testapp</display-name>
</web-app>
```

Notice the Servlet 3.0 spec version; Tomcat 7 provides that for us. If you are targeting Tomcat 6 for some reason, you'd need to downgrade to Servlet 2.5 (and Java 6).

resources/log4j.xml

To make log4j happy, we need to define some minimal configuration.

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<log4j:configuration xmlns:log4j="http://jakarta.apache.org/log4j/">

  <appender name="ASYNC" class="org.apache.log4j.AsyncAppender">
    <!-- capture caller location before handing off, otherwise %F:%L below comes out as "?" -->
    <param name="LocationInfo" value="true"/>
    <appender-ref ref="CONSOLE"/>
  </appender>

  <appender name="CONSOLE" class="org.apache.log4j.ConsoleAppender">
    <layout class="org.apache.log4j.PatternLayout">
      <param name="ConversionPattern" value="%-d: %-5p [%8c](%F:%L) %x - %m%n"/>
    </layout>
  </appender>

  <category name="org.hibernate">
    <priority value="error"/>
  </category>

  <category name="com.yourpackage">
    <priority value="debug"/>
  </category>

  <root>
    <priority value="INFO" />
    <appender-ref ref="ASYNC"/>
  </root>

</log4j:configuration>
```

In the above config, replace 'com.yourpackage' with the root package of your own application. With the Hibernate category limited to errors, logging noise should be minimal. Note that this configuration is aimed at development; when deploying to an actual server outside of your IDE you should add file logging to it. Refer to the log4j manual for more information.

That's it for the basic setup.

TO RECAP: at this point you will have the following files in your Mavenized project.

src/main/webapp/WEB-INF/web.xml

src/main/resources/log4j.xml

For the people who don't use Maven, there will be a summary of deployment setup at the end of the article.

Setting up hot-deployment

Before we move on to adding JSF and JPA to our little test application, you might want to go through the hot-deployment setup first. To make sure it works, let's add a very basic index.html to our webapp (src/main/webapp/index.html).

```html
<html>
<body>
  <h1>Hello world</h1>
  Hot deployment seems to be working.
</body>
</html>
```

Now it is time to set up Eclipse. First of all, we will want to create a server runtime. Choose Window > Preferences. In there, unfold Server and select Runtime Environments. This is likely a fresh workspace, so no server runtimes will be there yet. Click Add to add a new one.

Simply select Apache Tomcat 7 and click next. In the next dialog, select the correct directory where you installed Tomcat 7. Click Finish to create the server runtime.

Not there yet. Close the preferences and open the Servers view (Window > Show View > Other, and in the dialog select Server and then Servers). In the Servers view, right-click and select New > Server.

Make sure that Tomcat 7 is selected again; Eclipse will automatically pick up the runtime you just created. Click Next. In the resulting dialog, make sure that your webapp is in the Configured list so it will be deployed to the server. Then click Finish to create the server instance; it appears in the Servers view, and you'll also notice that your application is going to be published to the server. Hurray!

Depending on the version of Java you use, it might be a good idea to give Tomcat some more heap space. You can do that by double-clicking the server instance in the Servers view; an editor opens up where you can provide fine-grained configuration for the instance. One such configuration is the launch configuration, which you can change by clicking Open launch configuration.

In the launch configuration, select the Arguments tab, which shows the VM arguments being passed to Tomcat. We are going to add a new one to the VM arguments: -Xmx512m.

That gives the server a lot more breathing space should it be necessary. You can also use a lower value; during development you don't want to give yourself too much breathing space, because the higher the value you set, the less chance you have of catching heap-related problems during development. While you're looking at the arguments, take good note of the wtp.deploy property being set in the launch configuration.
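To confirm that the -Xmx argument actually reached the Tomcat JVM (and wasn't typed into the program arguments box by mistake), you could log the heap ceiling once at startup, for example from a backing bean. A minimal sketch using only the standard library; HeapReport is a hypothetical name. Note that the reported value is typically close to, and may be somewhat below, the -Xmx figure.

```java
/** Hypothetical startup check: report the heap ceiling the running JVM was given. */
final class HeapReport {

    private HeapReport() {
    }

    /** Maximum heap in megabytes as reported by the JVM; reflects -Xmx when it is set. */
    static long maxHeapMegabytes() {
        return Runtime.getRuntime().maxMemory() / (1024L * 1024L);
    }

    static String describe() {
        return "Max heap: " + maxHeapMegabytes() + " MB";
    }
}
```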

At this point you're ready to try it! Try publishing the application, which deploys it to the server. Of course you want to check that Eclipse is doing it right, so open up the tomcat/webapps directory and... what the heck? Nothing is deployed there!

Remember that I told you to take note of that property in the launch configuration? That directory is where Eclipse actually deploys the application. It will be a path like workspace/.metadata/.plugins/org.eclipse.wst.server.core/tmp0/wtpwebapps, and that is where you'll find the deployed files. Don't meddle with these yourself; whatever you want to do, do it from Eclipse, or else the published state in Eclipse may go out of sync with the filesystem (nothing that a good clean of the project cannot fix, though). In case of trouble it is good to know the location of these files so you can check exactly what Eclipse deployed and what it didn't.

Change the deployment location

You may want Eclipse to deploy the files to a location that is somewhat less hard to find; I certainly do. We can configure that, but before Eclipse will allow you to do so, you need to remove your project from the list of deployables under your server instance: right-click the project name and remove it. Then publish the server. Now, when you double-click the server instance, you will be allowed to change the deployment location under Server Locations (note: when I tried this on Eclipse Luna, I did not in fact need to remove the application from the server first).

Selecting the Use Tomcat installation option moves the deployment directory into the Tomcat installation directory, which is much more manageable. You can choose the webapps folder as the deploy path, but you may just as well keep the one that Eclipse picked for you; it makes no difference. One thing you have to be aware of: Eclipse will change the server configuration to make this work (backups are created). That is a bit unfortunate, as it means you can't re-use the same Tomcat installation to deploy multiple applications to. I would create a separate copy of Tomcat for each application you're developing concurrently.

Whatever the case, after changing the directory you will want to make your application deployable again. You do this by simply dragging the project node in your package explorer to the server instance in the servers view. The project will reappear in the list of deployables.

Fixing the deployment settings

We are going to return to the Servers view one more time, this time not to look at the server settings but at the settings of the application we're deploying. Double-click the server (make sure the application is going to be deployed to it) and then click the Modules tab hidden away at the bottom. Here we can change two things:

- The context name; this determines the URL under which we can reach our application in our local development environment

- Whether the webapp is reloadable or not

As mentioned, Eclipse takes over the Tomcat installation; the configuration files it manages for you show up in the Servers project in your workspace. One of these files is of interest to us at this point: server.xml. Open it up. If you scroll all the way to the bottom you'll find a `<Context>` element which contains exactly the information we just entered in the server editor. Now you know where to look to validate that your settings are correct before you start the server.

Make sure reloadable is false at this point. With reloadable enabled, Tomcat monitors WEB-INF/classes and WEB-INF/lib and reloads the entire web application whenever it detects a change there, which gets in the way of hot-swapping code through the debugger.

There just has to be something wrong

Getting a project to hot-deploy properly can be a bit fiddly. Recurring problems I run into are Eclipse wanting to hot-deploy test resources, and wanting to deploy web resources to both the root and the classes folder for some reason. When things don't go right, do a few basic things:

- make sure the Eclipse project is synced with the Maven poms (m2eclipse usually notifies you through a build error when the project is out of sync)

- clean the project and do a manual full publish

- check the build path to make sure there are no exclusions

- check the deployment assembly; make sure no test resources are deployed and that all the elements point to the proper deployment path (and exist only once!)

- perform a clean on the server instance

Note that making changes to the build path tends to also result in automatic changes being made to the deployment assembly; make sure the two are always synced.

If all else fails, check, check and triple-check all configuration files, the Maven poms, the Eclipse facets, the deployment assembly and the build path settings.

Whatever the problem is, it almost always shows up in the build path and the deployment assembly, even if it starts in a resource that influences those elements (such as a pom). Use the deployed application as your guide to figure out what is wrong: check the files that Eclipse has copied to the Tomcat web application directory (you should have configured which one that is by now). Which ones are missing? Which ones are in the wrong place? Which ones should not be there? The Tomcat documentation can guide you.
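When you do go digging through the deployed files, a small throwaway utility can save some squinting. The sketch below uses only java.nio.file; DeployedFiles and its methods are hypothetical names. It lists everything under a deployed application folder and flags one of the symptoms described earlier: files that show up both in the webapp root and under WEB-INF/classes.

```java
import java.io.IOException;
import java.nio.file.FileVisitResult;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.SimpleFileVisitor;
import java.nio.file.attribute.BasicFileAttributes;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical troubleshooting helper for inspecting what Eclipse actually published. */
final class DeployedFiles {

    private static final String CLASSES = "WEB-INF/classes/";

    private DeployedFiles() {
    }

    /** Sorted paths of all regular files below appDir, relative to it, with '/' separators. */
    static List<String> list(final Path appDir) throws IOException {
        final List<String> files = new ArrayList<>();
        Files.walkFileTree(appDir, new SimpleFileVisitor<Path>() {
            @Override
            public FileVisitResult visitFile(Path file, BasicFileAttributes attrs) {
                files.add(appDir.relativize(file).toString().replace('\\', '/'));
                return FileVisitResult.CONTINUE;
            }
        });
        Collections.sort(files);
        return files;
    }

    /** Files under WEB-INF/classes that also exist at the same relative path in the webapp root. */
    static List<String> duplicatedInClasses(List<String> files) {
        Set<String> all = new HashSet<>(files);
        List<String> duplicates = new ArrayList<>();
        for (String file : files) {
            if (file.startsWith(CLASSES) && all.contains(file.substring(CLASSES.length()))) {
                duplicates.add(file);
            }
        }
        return duplicates;
    }
}
```

You might point it at the folder Eclipse publishes to, for example `Paths.get(System.getProperty("wtp.deploy"), "testapp")` when run inside the Tomcat JVM, assuming the application folder is named after the project.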

Start the server

At this point, click the debug icon to start the server. Like with JBoss, you can easily debug and hot-swap your code when deploying to Tomcat. Tomcat should output some basic logging to the Eclipse console, which is nearly empty right now because we're not deploying much yet. Now navigate to http://localhost:8080/testapp (or whatever context name you configured earlier) and our basic index page should pop up. We're ready to bolt on more stuff, beginning with JSF.

Setting up JSF 2

To work with JSF 2 you need to add only one dependency to your Maven pom:

```xml
<dependency>
  <groupId>org.glassfish</groupId>
  <artifactId>javax.faces</artifactId>
  <version>2.1.29</version>
</dependency>
```

Note that it is not provided scope: we're deploying this baby with our webapp because Tomcat does not provide JSF out of the box. When you're deploying on a full JEE application server, you will want to mark this dependency as provided, because JSF 2.x is already provided by the container itself.

Plain JSF is likely not enough for you though, you will probably want to add one of the extension frameworks. In my case, I add Primefaces.

```xml
<dependency>
  <groupId>org.primefaces</groupId>
  <artifactId>primefaces</artifactId>
  <version>4.0</version>
</dependency>
```

Primefaces is nice and simple yet powerful and feature-rich, and since version 4 it is also available through Maven Central, so you do not need an external repository anymore.

JSF 2 - web.xml changes

JSF needs some basic setup in the web.xml so that it can start servicing our Facelets XHTML pages:

```xml
<context-param>
  <param-name>javax.faces.INTERPRET_EMPTY_STRING_SUBMITTED_VALUES_AS_NULL</param-name>
  <param-value>true</param-value>
</context-param>

<servlet>
  <servlet-name>Faces Servlet</servlet-name>
  <servlet-class>javax.faces.webapp.FacesServlet</servlet-class>
  <load-on-startup>1</load-on-startup>
</servlet>

<servlet-mapping>
  <servlet-name>Faces Servlet</servlet-name>
  <url-pattern>*.xhtml</url-pattern>
</servlet-mapping>

<!-- Tomcat's default welcome files are index.html, index.htm and index.jsp;
     add index.xhtml so that http://localhost:8080/testapp serves the JSF page -->
<welcome-file-list>
  <welcome-file>index.xhtml</welcome-file>
</welcome-file-list>

<context-param>
  <param-name>javax.faces.PROJECT_STAGE</param-name>
  <param-value>Development</param-value>
</context-param>

<!-- primefaces -->
<!-- If you want to use a different theme, configure it like this
<context-param>
  <param-name>primefaces.THEME</param-name>
  <param-value>aristo</param-value>
</context-param>
-->
```

This sets up the Faces servlet to process .xhtml files only. Note the INTERPRET_EMPTY_STRING_SUBMITTED_VALUES_AS_NULL parameter: it makes empty submitted values arrive in your beans as null instead of as empty strings. Lo and behold, when you try that on Tomcat it seems like the setting isn't doing anything! That's because Tomcat has its own EL implementation, which also needs to be configured. We need to add another VM argument to the server launch configuration (remember? Where you set the maximum heap space):

-Dorg.apache.el.parser.COERCE_TO_ZERO=false

The combination of those two setup steps is what makes it happen under Tomcat 7.
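Because this flag lives in the launch configuration rather than in the project, it is easy to lose when you create a fresh server instance. A tiny startup check can catch that; the sketch below only reads the system property and assumes nothing about Tomcat internals beyond the property name shown above (ElSettingsCheck is a hypothetical name).

```java
/** Hypothetical startup check that Tomcat's EL coercion flag was passed to the JVM. */
final class ElSettingsCheck {

    static final String COERCE_TO_ZERO = "org.apache.el.parser.COERCE_TO_ZERO";

    private ElSettingsCheck() {
    }

    /** True only when the VM argument -Dorg.apache.el.parser.COERCE_TO_ZERO=false is present. */
    static boolean coercionDisabled() {
        return "false".equalsIgnoreCase(System.getProperty(COERCE_TO_ZERO));
    }
}
```

Logging a warning when `coercionDisabled()` returns false tells you straight away why empty fields suddenly arrive as 0 again.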

JSF 2 - webapp/WEB-INF/faces-config.xml file

This file has become mostly obsolete with JSF 2 and up, but it is still best to provide it, even if it is empty. Some servers keep an eye on it to know when something needs to be redeployed - Tomcat is one of them. And you still need it if you want to set up very specific navigation rules.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<faces-config
    xmlns="http://java.sun.com/xml/ns/javaee"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-facesconfig_2_1.xsd"
    version="2.1">
</faces-config>
```

JSF 2 - getting something to deploy

That's the setup you need to work with JSF. To prove that it works we need some basic code. First, a very basic Facelets template; save it as template.xhtml in the webapp folder of your project (src/main/webapp):

```xml
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">
<html xmlns="http://www.w3.org/1999/xhtml"
      xmlns:h="http://java.sun.com/jsf/html"
      xmlns:f="http://java.sun.com/jsf/core"
      xmlns:ui="http://java.sun.com/jsf/facelets"
      xmlns:p="http://primefaces.org/ui">
<h:head>
  <title>Testapp</title>
  <meta http-equiv="Content-Type" content="text/html; charset=UTF-8" />
</h:head>
<h:body>
  <h:outputStylesheet target="head" library="css" name="main.css" />
  <div id="content">
    <div id="maincontent">
      <ui:insert name="content">Page body here</ui:insert>
    </div>
  </div>
</h:body>
</html>
```

One thing you may notice (if you have been using JSF for longer than today) is that the outputStylesheet is inside h:body, not h:head. This is because I added a component framework (Primefaces) into the mix, which adds its own stylesheets. Putting the outputStylesheet in the body ensures that your own stylesheets are always added to the page last, allowing you to override the framework's styles should you be so inclined.

This uses JSF 2 resource management; save the main.css file as webapp/resources/css/main.css so JSF can find it.

Finally, let's define an index.xhtml file:

```xml
<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">
<ui:composition xmlns="http://www.w3.org/1999/xhtml"
                xmlns:h="http://java.sun.com/jsf/html"
                xmlns:f="http://java.sun.com/jsf/core"
                xmlns:p="http://primefaces.org/ui"
                xmlns:ui="http://java.sun.com/jsf/facelets"
                template="template.xhtml">
  <ui:define name="content">
    #{index.hello}
  </ui:define>
</ui:composition>
```

And we need a backing bean to go with that, just to be sure that it all works:

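```java
import javax.faces.bean.ManagedBean;

// Registered under the default name "index" (class name with a lowercase first letter),
// which is what #{index.hello} in index.xhtml refers to. Without a scope annotation,
// a @ManagedBean is request scoped.
@ManagedBean
public class Index {

    public Index() {
    }

    public String getHello() {
        return "hello";
    }
}
```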

At this point you should remove the index.html file that you created earlier; our new index.xhtml replaces it. Check that all the new files are deployed (and that the index.html you removed is no longer deployed), then (re)start the server. The startup logging should now provide a hint that JSF is in fact present:

Initializing Mojarra 2.1.29 (SNAPSHOT 20120206) for context '/testapp'

Good, JSF is initializing things. This line also tells you under what context name Eclipse is deploying the application, which should be the context name you configured earlier. Now navigate to http://localhost:8080/testapp/index.xhtml and, if everything is correct, you'll be greeted by the hello message from the backing bean.

TO RECAP: at this point you have the following in your project:

src/main/webapp/WEB-INF/web.xml (modified)

src/main/webapp/WEB-INF/faces-config.xml

src/main/webapp/index.xhtml

src/main/webapp/template.xhtml

src/main/webapp/resources/css/main.css

src/main/resources/log4j.xml

JPA 2 - setup

To be able to use JPA in our webapp we'll need to add a persistence provider to our Maven pom. We'll be using Hibernate.

```xml
<dependency>
  <groupId>org.hibernate</groupId>
  <artifactId>hibernate-core</artifactId>
  <version>4.3.1.Final</version>
</dependency>
<dependency>
  <groupId>org.hibernate</groupId>
  <artifactId>hibernate-entitymanager</artifactId>
  <version>4.3.1.Final</version>
</dependency>
```

This adds not only Hibernate 4.3 (check what the latest version is, though) to the dependencies, but also the JPA API. Oracle does not provide an API dependency for JPA 2 (at the time of writing at least), but luckily most persistence providers provide their own. Hibernate 4 artifacts are published to Maven Central, so you shouldn't need to add the JBoss Nexus repository to your Maven configuration; my Maven article has more details on repositories should you need them.

JPA 2 - datasource setup

Before JPA can do anything, we need to provide a datasource. For testing purposes you don't need to install a real database; you can set up an in-memory one like H2 or HSQL. Because it is my old friend I'll be using HSQL here, but you can easily swap it for H2 if you so please. First of all we need to add a datasource to our development setup. To do that we are going to modify another file that Eclipse is managing for us: the context.xml file (remember: it's in the Servers project that Eclipse created when we set up Tomcat):

```xml
<Context>
  <Resource name="jdbc/TestappDS" auth="Container" type="javax.sql.DataSource"
            driverClassName="org.hsqldb.jdbcDriver" url="jdbc:hsqldb:mem:testapp"
            username="sa" password="" maxActive="20" maxIdle="10" maxWait="-1" />
  ...
</Context>
```

Here we define a datasource/connection pool named "jdbc/TestappDS", backed by a simple HSQLDB in-memory database. Tomcat is going to manage this datasource for us, so put the HSQLDB driver jar in the tomcat/lib folder. To be 100% clear: it is NOT enough to add the JDBC driver jar to your web application; Tomcat itself needs access to it, hence it goes in the Tomcat library folder. The above configuration is still not enough, though: we also need to hook it into our web.xml file to actually make the datasource available to our application:

```xml
<!-- tomcat -->
<resource-ref>
  <description>DB Connection</description>
  <res-ref-name>jdbc/TestappDS</res-ref-name>
  <res-type>javax.sql.DataSource</res-type>
  <res-auth>Container</res-auth>
</resource-ref>
```
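JPA will pick the datasource up through persistence.xml, but if you ever want plain JDBC access to the same pool (say, for a quick sanity query), a JNDI lookup is all it takes. A minimal sketch using only javax.naming and javax.sql; the class name is hypothetical, and the name is the resource-ref above prefixed with the standard java:comp/env context. Outside Tomcat there is no such context, so the lookup fails, which the sketch turns into a clear exception.

```java
import javax.naming.InitialContext;
import javax.naming.NamingException;
import javax.sql.DataSource;

/** Hypothetical helper: fetch the Tomcat-managed pool declared in context.xml and web.xml. */
final class TestappDataSource {

    // <res-ref-name> from web.xml, under the component environment context.
    static final String JNDI_NAME = "java:comp/env/jdbc/TestappDS";

    private TestappDataSource() {
    }

    static DataSource lookup() {
        try {
            return (DataSource) new InitialContext().lookup(JNDI_NAME);
        } catch (NamingException e) {
            // Unit tests and main methods run without Tomcat's JNDI context.
            throw new IllegalStateException("DataSource " + JNDI_NAME + " not available", e);
        }
    }
}
```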

JPA 2 - resources/META-INF/persistence.xml file

Now that we have our datasource we can set up JPA and Hibernate. To make it nice and confusing, this file does not go into the META-INF folder of your webapp; it goes into a META-INF folder that is on the classpath. In Maven terms that means you create the file as src/main/resources/META-INF/persistence.xml, so it ends up in WEB-INF/classes/META-INF/persistence.xml inside the war.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<persistence xmlns="http://java.sun.com/xml/ns/persistence"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://java.sun.com/xml/ns/persistence
                 http://java.sun.com/xml/ns/persistence/persistence_2_0.xsd" version="2.0">
  <persistence-unit name="testapp" transaction-type="RESOURCE_LOCAL">
    <!-- Hibernate 4.3 provider class; the older org.hibernate.ejb.HibernatePersistence is deprecated -->
    <provider>org.hibernate.jpa.HibernatePersistenceProvider</provider>
    <non-jta-data-source>java:/comp/env/jdbc/TestappDS</non-jta-data-source>
    <properties>
      <property name="hibernate.format_sql" value="true" />
      <property name="hibernate.show_sql" value="false" />
      <property name="hibernate.jdbc.batch_size" value="20" />
      <property name="hibernate.cache.use_query_cache" value="false" />
      <property name="hibernate.hbm2ddl.auto" value="update" />
      <property name="hibernate.cache.use_second_level_cache" value="false"/>
    </properties>
  </persistence-unit>
</persistence>
```

Tomcat is not a JEE container, so we are not using JTA or container-managed transactions; instead it is a simple RESOURCE_LOCAL setup. Fortunately, we don't need to declare any entities here; they are still discovered during the setup of the EntityManagerFactory. Hibernate is configured here to auto-generate the database schema from the entities (hbm2ddl.auto set to update), which is the most convenient way to work with an in-memory database.

Also note the JNDI name of the datasource. That is as it is documented in the Tomcat datasource HOW-TO.

TO RECAP: right now you'll have the following in your project:

Servers/context.xml (modified)

src/main/webapp/WEB-INF/web.xml (modified)

src/main/webapp/WEB-INF/faces-config.xml

src/main/webapp/index.xhtml

src/main/webapp/template.xhtml

src/main/webapp/resources/css/main.css

src/main/resources/META-INF/persistence.xml

src/main/resources/log4j.xml

And you put a JDBC driver jar of whatever database you are going to use in the tomcat/lib folder.

It might be interesting for people who do not use Maven to know where all the configuration files need to go in the war:

| What | Where (inside the war) |
|---|---|
| web.xml | WEB-INF |
| faces-config.xml | WEB-INF |
| persistence.xml | WEB-INF/classes/META-INF |
| log4j.xml | WEB-INF/classes |

JPA 2 - Managing entities and transactions

Normally the EJB container would take care of things such as managing transactions and providing a valid EntityManager to our code. But we have no EJB container here, so we need to do some things ourselves. I still like to stick as close as I can to the "EJB" way, so here is my solution to the problem: the Dba class. It is really quite simple:

```java
package com.yourapp.service;

import javax.persistence.EntityManager;
import javax.persistence.EntityManagerFactory;
import javax.persistence.Persistence;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

/**
 * Some ugly code to make transaction management nice and dumb.
 */
public class Dba {

    private static final Object LOCK = new Object();

    // volatile: a thread that sees initialized == true also sees the emf assigned before it
    private static volatile boolean initialized = false;

    private static EntityManagerFactory emf = null;

    protected Logger logger = LoggerFactory.getLogger(getClass());

    private EntityManager outer;

    /**
     * Open Dba and also start a transaction.
     */
    public Dba() {
        this(false);
    }

    /**
     * Open Dba; if readOnly, no JPA transaction is started, so changes made through
     * the EntityManager should not be expected to reach the database.
     */
    public Dba(boolean readOnly) {
        initialize();
        openEm(readOnly);
    }

    public void openEm(boolean readOnly) {
        if (outer != null) {
            return;
        }
        outer = emf.createEntityManager();
        if (!readOnly) {
            outer.getTransaction().begin();
        }
    }

    /**
     * Get the outer EntityManager; openEm() must already have been called for this to succeed.
     */
    public EntityManager getActiveEm() {
        if (outer == null) {
            throw new IllegalStateException("No transaction was active!");
        }
        return outer;
    }

    /**
     * Close the entity manager, properly committing or rolling back a transaction if one is still active.
     */
    public void closeEm() {
        if (outer == null) {
            return;
        }
        try {
            if (outer.getTransaction().isActive()) {
                if (outer.getTransaction().getRollbackOnly()) {
                    outer.getTransaction().rollback();
                } else {
                    outer.getTransaction().commit();
                }
            }
        } finally {
            outer.close();
            outer = null;
        }
    }

    /**
     * Mark the transaction as rollback only, if there is an active transaction to begin with.
     */
    public void markRollback() {
        // JPA throws IllegalStateException for setRollbackOnly() on an inactive transaction
        if (outer != null && outer.getTransaction().isActive()) {
            outer.getTransaction().setRollbackOnly();
        }
    }

    public boolean isRollbackOnly() {
        return outer != null
                && outer.getTransaction().isActive()
                && outer.getTransaction().getRollbackOnly();
    }

    // Thread-safe, one-time initialization of the EntityManagerFactory.
    private void initialize() {
        if (initialized) {
            return;
        }
        synchronized (LOCK) {
            if (initialized) {
                return;
            }
            try {
                emf = Persistence.createEntityManagerFactory("testapp");
            } catch (RuntimeException e) {
                // fail loudly now instead of with a NullPointerException in openEm() later
                logger.error("Failed to setup persistence unit!", e);
                throw e;
            }
            // only flag success once emf is assigned; a failed attempt is retried next time
            initialized = true;
        }
    }
}
```

This incredibly simple class takes care of a few basic things:

- using one-time, on-demand initialization, it sets up the EntityManagerFactory

- it provides a way to create, obtain and properly close an EntityManager

- the Dba class provides an explicit "readOnly" mode which you should use when you are not going to persist any changes (effectively no JPA transaction is created in readonly mode)

- it provides a way for you to trigger a rollback

The ugly init code makes it pretty much self-sufficient; there's no need to trigger the setup using something like a context listener.

Using the class is really simple: you create an instance, and from that moment on you use that one instance to manage a transaction. You may need to use the same transaction in multiple methods (DAO, service, whatever); I manage that by simply passing the Dba object along to those methods. Careful use of openEm()/closeEm() and getActiveEm() can prevent mistakes here. Example:
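If you'd rather not maintain the double-checked locking yourself, the initialization-on-demand holder idiom gives the same lazy, thread-safe, one-time setup by leaning on the JVM's guarantee that a class is initialized exactly once. To keep the sketch runnable without JPA, ExpensiveFactory is a hypothetical stand-in; in Dba the holder field would call Persistence.createEntityManagerFactory("testapp") instead.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Sketch of the initialization-on-demand holder idiom. */
final class LazyFactory {

    /** Counts how often the expensive setup ran; it should never exceed 1. */
    static final AtomicInteger CREATIONS = new AtomicInteger();

    /** Hypothetical stand-in for javax.persistence.EntityManagerFactory. */
    static final class ExpensiveFactory {
        private ExpensiveFactory() {
            CREATIONS.incrementAndGet();
        }
    }

    private LazyFactory() {
    }

    // The JVM runs Holder's static initializer once, on the first read of
    // Holder.INSTANCE, and does the necessary locking for us.
    private static final class Holder {
        static final ExpensiveFactory INSTANCE = new ExpensiveFactory();
    }

    static ExpensiveFactory get() {
        return Holder.INSTANCE;
    }
}
```

The trade-off: if the static initializer throws, the Holder class is marked as failed and later calls get a NoClassDefFoundError instead of a retry, whereas the Dba approach can try again.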

```java
// In a high level service class, or perhaps even a JSF backing bean:
public void doThingsAndStuff() {
    // open transaction
    Dba dba = new Dba();
    try {
        // createUser 'adopts' the transaction
        UserDao.createUser(dba, "Someone", "some1", "Some1");
    } finally {
        // 100% sure that the transaction and entity manager will be closed
        dba.closeEm();
    }
}

// UserDao.java
import javax.persistence.EntityManager;

public class UserDao {

    // low level DAO method, expecting a transaction to already exist
    public static User createUser(Dba dba, String name, String username, String password) {
        EntityManager em = dba.getActiveEm();
        User user = new User(name, username, password);
        em.persist(user);
        return user;
    }
}
```

The Dba class basically gives you the power that Bean Managed Transactions would give you when using EJB technology. You can start and close transactions at your leasure. To make a method adopt a running transaction, simply pass along the Dba object that is managing the transaction and be sure to use getActiveEm() to be 100% positive that the entity manager/transaction already existed. This is important because this way you can make dumb assumptions in your code; createUser() assumes that some other piece of code is responsible for closing the entity manager part of the Dba object that is passed to it. Now say that you make a mistake and you don't actually start a transaction before calling createUser(); then getActiveEm() will simply fail. Easy peasy.

Want to trigger a rollback? As you can see, the closeEm() method provides for that; your responsibility is to mark the transaction as rollback only through the appropriate Dba method. The easiest place to do that is where you deal with your exceptions. If exceptions are handled "above" the layer of code that created the Dba object, that layer will have to catch the exception, mark the transaction as rollback only and then rethrow the exception.
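The catch, mark, rethrow case can be sketched in a self-contained way. `TxHandle` is a hypothetical stand-in for Dba that models only a rollback-only flag and a close method that commits or rolls back; `markRollbackOnly()` is an assumed name, not the actual Dba method. `OrderController.placeOrder()` (also hypothetical) plays the role of the code that creates the transaction while the exception itself is handled further up.

```java
import java.util.List;

// Hypothetical stand-in for Dba: only the rollback-only flag and close() are modelled.
class TxHandle {
    private final List<String> outcomes; // where close() records what it did
    private boolean rollbackOnly = false;

    TxHandle(List<String> outcomes) {
        this.outcomes = outcomes;
    }

    void markRollbackOnly() {
        rollbackOnly = true;
    }

    // Mirrors the idea behind closeEm(): commit unless marked rollback only.
    void close() {
        outcomes.add(rollbackOnly ? "ROLLBACK" : "COMMIT");
    }
}

class OrderController {
    // This method creates the transaction, but the exception is handled higher up
    // (e.g. in a backing bean), so it has to catch, mark and rethrow.
    static void placeOrder(List<String> outcomes, boolean fail) {
        TxHandle tx = new TxHandle(outcomes);
        try {
            // ... DAO calls that adopt tx would go here ...
            if (fail) {
                throw new IllegalStateException("order rejected");
            }
        } catch (RuntimeException e) {
            tx.markRollbackOnly();
            throw e; // the caller still sees the original exception
        } finally {
            tx.close(); // runs after the catch block has marked the rollback
        }
    }
}
```

The order matters: because the catch block runs before finally, the transaction is already marked when close() decides between commit and rollback.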

To minimize the strain on resources, you should only open an EntityManager transaction when you actually need one, which means when you will be making changes that need to be persisted or rolled back. When you only fetch data from the database you don't need a transaction, so you should not open one. Dba provides for this by allowing you to open it in readOnly mode.

@SuppressWarnings("unchecked")
public List<User> getAllUsers(){
    Dba dba = new Dba(true); // open in readOnly mode
    try{
        return dba.getActiveEm().createQuery("select u from User u order by u.name").getResultList();
    } finally {
        dba.closeEm();
    }
}

It can hardly be made any lazier, IMO :) You could change the design of Dba to default to readOnly mode in the no-arg constructor; I leave that up to your own discretion.

If all that seems too complicated to you (or perhaps makes your eyes bleed), I'm sure you can come up with an alternative design that works for you personally. You have to find the balance between flexibility, robustness (which also includes catching mistakes you make) and ease of development. Ease of development is not only about minimizing code; it is also about maximizing the opportunity to catch mistakes. But before you pass judgement, I do ask that you first glance over the section on adding utility functions to Dba.

This is by no means the only way of working with an EntityManager object in a web environment (there are, for example, inversion of control / dependency injection frameworks such as Spring or Google Guice). But why bother when you can do it with a basic (stateless) application design by simply passing along an object?

Simple = better.

Then you get the discussion of how you would manage controller classes. Take the above example; you can distill from the code that UserDao.createUser is a static method. From your teachers to books to your colleagues, it will probably have been hammered into your head that you should avoid static methods and variables where you can. And those people and books are right, you should.

But this type of code you want to keep as stateless as possible. There is no avoiding multithreading in a web application, as there are going to be multiple users hammering on buttons at the same time, and stateless code is by definition thread safe and works in load balanced environments. So I pose the question: why NOT use static methods in your controller classes? You could create an instance of the controller and then call its methods, but you could also simply make the controller methods static. Then you don't have to bother creating an instance, and (as long as you don't add static fields) you are basically guaranteed that you won't slip in code that keeps state.

It is a very personal thing. I certainly don't mind creating static controllers to save me time and effort (and possibly the creation of many short-lived objects). The Play framework, for example, follows exactly the same design with static controller methods.

Simple = better.
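To make the thread-safety argument concrete, here is a small sketch with a hypothetical `PriceController` whose only method works purely on its parameters and local variables, called from many threads at once. The point is the absence of static fields: one static field holding, say, a running total would be shared by all request threads and break this immediately.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical static controller: no fields at all, so there is no state to share.
final class PriceController {
    private PriceController() {
    }

    // Everything lives in parameters and local variables, which are private
    // to each calling thread, so concurrent requests cannot interfere.
    static long totalInCents(List<Long> pricesInCents) {
        long total = 0;
        for (long price : pricesInCents) {
            total += price;
        }
        return total;
    }
}

// Simulates many simultaneous requests calling the static method.
final class ConcurrentCalls {
    private ConcurrentCalls() {
    }

    static boolean allCorrect(int requests) {
        ExecutorService pool = Executors.newFixedThreadPool(8);
        try {
            List<Future<Long>> results = new ArrayList<>();
            for (int i = 0; i < requests; i++) {
                final long n = i;
                Callable<Long> request = () -> PriceController.totalInCents(Arrays.asList(n, n, n));
                results.add(pool.submit(request));
            }
            for (int i = 0; i < requests; i++) {
                if (results.get(i).get() != 3L * i) {
                    return false;
                }
            }
            return true;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```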

JPA 2 - Beware of nested transactions

Using the Dba class it is quite easy to create multiple entity managers and have transactions running in parallel on the same thread. This is however not as foolproof as it may seem, because you may run into deadlocks. The database you are connecting to, even an in-memory one like HSQLDB or H2, will likely employ table and/or row locking. When firing off multiple nested transactions you may inadvertently try to persist stuff to the same table from two transactions at once, and the resulting table lock can wreak havoc: the inner transaction waits for a lock held by the outer one, which in turn cannot finish until the inner one returns. I ran into this puppy myself when using HSQLDB; the thread just hangs when you trigger a table lock. By switching to H2 I finally figured out what was going on (because H2 sets a lock timeout by default).

My remedy: don't use nested transactions. Just don't do it. You want to simplify your code layer, and the transaction management should follow suit. Sure, open and close transactions as you see fit, but don't make it any more difficult than it needs to be; try to keep one active transaction per thread. Otherwise you will have to start dealing with the complexities of such an environment.

To maintain this, follow another simple rule: don't start a transaction in your backing bean. Only start (and clean up) transactions in your controller methods. From there you can easily propagate the entity manager as I've already demonstrated: by simply passing the Dba object along as a parameter.
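The "one transaction per thread" rule can also be enforced mechanically instead of by discipline alone. The sketch below is a hypothetical `TxGuard` built on a ThreadLocal flag; it is not part of the Dba class, but the enter()/exit() calls could plausibly live in Dba's constructor and closeEm(). A nested attempt fails fast with an exception instead of hanging on a table lock.

```java
// Hypothetical guard enforcing "one active transaction per thread".
final class TxGuard {
    private static final ThreadLocal<Boolean> ACTIVE = ThreadLocal.withInitial(() -> Boolean.FALSE);

    private TxGuard() {
    }

    // Call when a transaction is opened; fails fast instead of risking a lock wait.
    static void enter() {
        if (ACTIVE.get()) {
            throw new IllegalStateException(
                "A transaction is already active on this thread; pass the existing one along instead");
        }
        ACTIVE.set(Boolean.TRUE);
    }

    // Call when the transaction is closed, typically from a finally block.
    static void exit() {
        ACTIVE.remove(); // remove() rather than set(false), so pooled threads keep no stale entry
    }

    static boolean isActive() {
        return ACTIVE.get();
    }

    // Convenience wrapper: run work inside exactly one guarded transaction.
    static void inTransaction(Runnable work) {
        enter();
        try {
            work.run();
        } finally {
            exit();
        }
    }
}
```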

JPA 2 - Adding utility functions to Dba

I find the EntityManager interface a bit... verbose. There is more typing going on than is really necessary. Luckily, with our Dba class as the base, we can add some wrapper code around EntityManager and Query to seriously reduce the typing involved. Let's start with Query, which we will wrap in a class called QWrap:

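import java.util.List;
import javax.persistence.NoResultException;
import javax.persistence.Query;

public class QWrap {
    private final Query q;

    public QWrap(Query q){
        this.q = q;
    }

    // chainable shorthand for setParameter()
    public QWrap par(String pname, Object v){
        q.setParameter(pname, v);
        return this;
    }

    // returns null instead of throwing when the query has no result
    @SuppressWarnings("unchecked")
    public <T> T single(Class<T> clazz){
        try{
            return (T) q.getSingleResult();
        } catch(NoResultException nre){
            return null;
        }
    }

    @SuppressWarnings("unchecked")
    public <T> List<T> multi(Class<T> clazz){
        return (List<T>) q.getResultList();
    }
}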

Nothing too shocking; these methods are just shorthand versions of the Query methods you will probably use often (the Class parameter only serves to tell the compiler what T is). You can apply the same trick to other methods you tend to use. Now we can plug that into the Dba class:

public QWrap query(String q){
    if(outer == null){
        throw new IllegalStateException("Creating a query when there is no active transaction!");
    }
    return new QWrap(outer.createQuery(q));
}

With a simple call to query() we now obtain our QWrap object. Let's see it in action:

Dba dba = new Dba(true);
try{
    List<Person> people = dba.query("select p from Person p where p.address.city=:city").par("city", "Washington").multi(Person.class);
} finally {
    dba.closeEm();
}

Neat, huh? Note the abuse of generics here to get around having to do typecasts. But that's not all we can do. With a few more utility methods we can save even more typing. Let's begin by laying down a convention: all our entities shall have an auto-incrementing ID column (using whatever strategy your DBMS provides). Using that, we can slap an interface on our entities:

public interface IdAcc {
    public long getId();
}

This little interface is going to save us plenty of boilerplate code. You simply apply it to all your entities. With this baby in our toolbox, we can make a save() method that does a persist() or a merge() when required and returns the managed instance (if not in readonly mode):

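public <T extends IdAcc> T save(T obj){
    if(outer == null){
        throw new IllegalStateException("Saving an entity when there is no active transaction!");
    }
    if(obj.getId() > 0L){
        // the entity already has an ID: merge it and return the managed copy
        return outer.merge(obj);
    } else {
        // a new entity: persist() makes obj itself the managed instance
        outer.persist(obj);
        return obj;
    }
}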
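The persist-or-merge decision in save() hinges entirely on the IdAcc convention: an ID of 0 means "never saved". The sketch below mimics that decision against an in-memory map instead of a real EntityManager, so it can run on its own. `InMemoryStore` and `Person` are hypothetical; the "merge" branch returns a separate copy to reflect that a real merge() hands back a managed instance rather than the object you passed in.

```java
import java.util.HashMap;
import java.util.Map;

interface IdAcc {
    long getId();
}

// Hypothetical entity following the auto-incrementing ID convention.
class Person implements IdAcc {
    private long id; // 0 until the store assigns one, like a generated ID
    private final String name;

    Person(String name) {
        this.name = name;
    }

    public long getId() { return id; }
    void setId(long id) { this.id = id; }
    String getName() { return name; }
}

// Hypothetical in-memory stand-in for the EntityManager calls made by save().
class InMemoryStore {
    private final Map<Long, Person> rows = new HashMap<>();
    private long nextId = 1;

    // Same decision as Dba.save(): id > 0 means merge, otherwise persist.
    Person save(Person p) {
        if (p.getId() > 0L) {
            // "merge": store and return a separate copy, not the argument itself
            Person copy = new Person(p.getName());
            copy.setId(p.getId());
            rows.put(copy.getId(), copy);
            return copy;
        }
        // "persist": generate a new ID and keep the object itself
        p.setId(nextId++);
        rows.put(p.getId(), p);
        return p;
    }

    int count() {
        return rows.size();
    }
}
```

Because the caller always uses the return value of save(), the same form-handling code works whether the entity was new or edited.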

I tend to avoid merge() myself if I can help it, but especially in a web application it can sometimes save plenty of time to just have JSF dump the changed values into an entity object and then merge it. In any case, using the save() method you can not only do that, you can also easily create forms that can be reused for both adding and updating data. More! Let's define a method to fetch an entity by ID and one to 'refresh' an entity, that is, to fetch a fresh, managed copy of a detached entity by its ID:

public <T> T getById(Class<T> clz, long id){
    if(outer == null){
        throw new IllegalStateException("No transaction was active!");
    }
    return outer.find(clz, id);
}

@SuppressWarnings("unchecked")
public <T extends IdAcc> T refresh(T obj){
    if(outer == null){
        throw new IllegalStateException("No transaction was active!");
    }
    return (T) outer.find(obj.getClass(), obj.getId());
}

The getById() method isn't too special; it's just a thin wrapper around the find() method of the EntityManager. The refresh() method is incredibly useful however, as you can just pass the entity object to it and the method does the rest. The typecast in refresh() is necessary because obj.getClass() returns Class<? extends IdAcc> rather than Class<T>, so find() can only promise to return some IdAcc. Not that you'll notice it is there in your calling code:

User user = dba.getById(User.class, userId);
user = dba.refresh(user);
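The cast in refresh() can be reproduced without JPA. In the sketch below `FakeEm` is a hypothetical map-backed stand-in for the EntityManager (keyed by ID only, which is enough for a single entity type), and `Book` is a hypothetical entity. The first refresh() has the same shape as the one in Dba and needs the unchecked cast; the second overload shows a checked alternative where the caller supplies the Class<T> token and `Class.cast()` does the work.

```java
import java.util.HashMap;
import java.util.Map;

interface IdAcc {
    long getId();
}

// Hypothetical entity.
class Book implements IdAcc {
    private final long id;
    private final String title;

    Book(long id, String title) {
        this.id = id;
        this.title = title;
    }

    public long getId() { return id; }
    String getTitle() { return title; }
}

// Hypothetical stand-in for the EntityManager, keyed by ID only.
class FakeEm {
    private final Map<Long, Object> rows = new HashMap<>();

    void put(IdAcc entity) {
        rows.put(entity.getId(), entity);
    }

    // Same shape as EntityManager.find(Class<T>, Object): a checked cast via the class token.
    <T> T find(Class<T> clz, long id) {
        Object row = rows.get(id);
        return clz.isInstance(row) ? clz.cast(row) : null;
    }

    // Like Dba.refresh(): getClass() yields Class<? extends IdAcc>, so find()
    // only promises "some IdAcc" and the result must be cast back to T.
    @SuppressWarnings("unchecked")
    <T extends IdAcc> T refresh(T obj) {
        return (T) find(obj.getClass(), obj.getId());
    }

    // Checked alternative: the caller passes Class<T>, so no unchecked cast is needed.
    <T extends IdAcc> T refresh(Class<T> clz, T obj) {
        return find(clz, obj.getId());
    }
}
```

The trade-off is one extra argument at every call site in exchange for dropping the @SuppressWarnings.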

This is just a little bag of tricks; I'm sure that, based on the ones I've provided, you can add a good deal more.

JPA 2 - Bootstrapping database data

I already discussed how to bootstrap a prototype web application's database here; I would give that a read if you want more information. For now, let's create a simple class in which we can insert initial data:

import java.util.List;
import javax.persistence.EntityManager;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class AppConfig {
    private static volatile boolean initialized = false;
    // a dedicated lock object; a boxed Boolean is a poor choice to synchronize on
    private static final Object lock = new Object();
    // static, because it is used from the static load() method
    private static final Logger logger = LoggerFactory.getLogger(AppConfig.class);

    @SuppressWarnings("unchecked")
    public static List<User> getAllUsers(Dba dba){
        load();
        return dba.getActiveEm().createQuery("select u from User u order by u.name").getResultList();
    }

    private static void load(){
        if(initialized){
            return;
        }
        synchronized(lock){
            if(initialized){
                return;
            }
            initialized = true;
            Dba dba = new Dba();
            try{
                EntityManager em = dba.getEm();
                // insert all test data here
                em.persist(new User("testuser", "test1", "Test1"));
                // more init here
                logger.info("AppConfig initialized successfully.");
            } catch(Throwable t){
                logger.error("Failed to setup persistence unit!", t);
            } finally {
                dba.closeEm();
            }
        }
    }
}

Of course this code is only necessary when you use an in-memory database for prototyping or testing purposes; if you use a physical database then you don't need the AppConfig class at all.

The load() method, much like the Dba class, does an on-demand, one-time initialization. Reloading the webapp may cause this to happen a second time though, so you might want to build in a check whether data already exists in the database, to prevent data from being inserted multiple times.
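The load() method uses the double-checked locking idiom: an unsynchronized check of a volatile flag, then a second check inside the synchronized block. Below is a standalone sketch of that idiom combined with the "does the data already exist?" check suggested above, so a second initialization after a reload does not insert the seed data twice. `SeedLoader` and its methods are hypothetical and a plain List stands in for the User table; in the real class the emptiness check would be something like a count query.

```java
import java.util.List;

// Hypothetical stand-in for AppConfig.load(); a List plays the role of the User table.
final class SeedLoader {
    // A dedicated, final lock object that no other code can get hold of.
    private static final Object LOCK = new Object();
    // volatile so the unsynchronized fast-path read sees the latest value.
    private static volatile boolean initialized = false;
    private static int runs = 0; // only touched while holding LOCK

    private SeedLoader() {
    }

    static void load(List<String> userTable) {
        if (initialized) {
            return; // fast path: no locking once initialization has happened
        }
        synchronized (LOCK) {
            if (initialized) {
                return; // another thread initialized while we waited for the lock
            }
            initialized = true;
            runs++;
            // Insert seed data only when the table is still empty, so a second
            // initialization (e.g. after a webapp reload) does not duplicate rows.
            if (userTable.isEmpty()) {
                userTable.add("testuser");
            }
        }
    }

    // Simulates a webapp reload, which starts over with fresh static state.
    static void resetForReload() {
        synchronized (LOCK) {
            initialized = false;
        }
    }

    static int seedRuns() {
        synchronized (LOCK) {
            return runs;
        }
    }
}
```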

In this prototyping setup, it is your job to make sure that load() is called when it is required. This should happen automatically when you make the AppConfig class responsible for providing important data. User objects are a prime example: one of the first things you'll most likely do is log in, so that will trigger the database to be initialized very early on. You could also trigger it in a context listener; then you don't need to have code in place that ensures it happens.

Note that when load() is invoked for the first time, that will be the moment where JPA is set up and the database is generated. So you won't know whether you set up all that stuff correctly until you actually trigger your application to need the database.

JPA 2 - Getting something to deploy

So before we get to deploying the app and seeing if it works, we need some minimal stuff to make things happen. We at least need an entity to see that the database is being built, but we also need some bootstrapping code to get the database logic to initialize. Let me copy a slimmed-down version of the User entity from my prototype article:

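import java.io.Serializable;
import javax.persistence.Entity;
import javax.persistence.GeneratedValue;
import javax.persistence.Id;

@Entity
public class User implements Serializable {
    private static final long serialVersionUID = 1L;

    @Id
    @GeneratedValue
    private long id;
    private String name;
    private String username;
    private String password;

    // JPA requires a no-arg constructor
    public User(){
    }

    public User(String name, String username, String password){
        this.name = name;
        this.username = username;
        this.password = password;
    }

    // getters and setters here...
}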

You can put it in any package you want; the class will be found automatically as soon as we cause the EntityManagerFactory to be created.

Now, to get the Dba class to initialize, we need to use it at least once. Let's add an init method to our JSF backing bean to see that happening quickly:

import javax.annotation.PostConstruct;
import javax.faces.bean.ManagedBean;
import javax.faces.bean.ViewScoped;

@ManagedBean
@ViewScoped
public class Index {
    public Index(){
    }

    @PostConstruct
    public void init(){
        // breaking my own rule: starting a transaction in the backing bean. This is only for demonstration purposes however.
        Dba dba = new Dba();
        try{
            // some stupid code that triggers the database to be created
            AppConfig.getAllUsers(dba);
        } finally {
            // clean up the transaction
            dba.closeEm();
        }
    }

    public String getHello(){
        return "hello";
    }
}

The @PostConstruct method is abused here to bootstrap our database, just so that you can see it happening. As I stated before, you don't ever want to see the Dba class being used in a backing bean, so some sort of controller method would normally be called here. But I don't want to litter the article with too many pieces of fake code.

As soon as you deploy the application and start the server, nothing special will happen. But as soon as you navigate to the application's index page, the call to Dba will be triggered which will in turn cause the database to be initialized (you'll notice how tables will be created in the logging) and the data bootstrapping code to be executed.

And that's basically it - setting up a project for JSF 2 and JPA 2 to deploy on Tomcat 7, using Eclipse as the IDE. At this point you'll be ready to write some code. The remainder of this article will deal with annoyances and troubles you may run into from this moment on.

Dealing with multiple environments (dev, acceptance, production)

When you develop an application, at some point you will want to publish it somewhere other than your own development computer, be it a testing environment or live production. The tricky part is that each environment will require certain settings to be entirely different; most notably, the datasource will need to point to a completely different database. Fortunately, because we use Tomcat, that is relatively easy to manage, and Eclipse has already helped us a bunch too: what you do is utilize the context.xml file. We have ours in our development environment neatly tucked away in that Servers project. This file is not part of our project and so will never be deployed with the application to any other server.

But as said in the previous section, we don't want to put application-specific configuration in the global context.xml file, or else we'll have difficulties deploying multiple applications at the same time. Instead, Tomcat allows us to specify a configuration file per application. You put this file in a slightly awkward-to-describe directory inside the tomcat_dir/conf directory; the format is:

tomcat_dir/conf/Catalina/HOSTNAME/WARNAME.xml

So when deploying on localhost and the war name is testapp.war, the directory and file would be:

tomcat_dir/conf/Catalina/localhost/testapp.xml

Inside this file we can then put the information we put in the context.xml in our development setup:

<Context>
    <Resource bla bla bla/>
</Context>
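The naming rule above is mechanical enough to capture in a few lines, which can be handy in a deployment script. This is a hypothetical helper that assumes the default engine name `Catalina`. It also applies Tomcat 7's convention for context paths, which goes slightly beyond the simple WARNAME rule: the root context uses `ROOT.xml`, and a nested path such as `/shop/admin` maps to `shop#admin.xml`.

```java
import java.nio.file.Path;

// Hypothetical helper: where Tomcat looks for a per-application context file.
final class ContextFileLocator {
    private ContextFileLocator() {
    }

    // contextPath is the URL path the app is deployed under, e.g. "/testapp" for testapp.war.
    static Path contextFile(Path tomcatDir, String hostName, String contextPath) {
        String trimmed = contextPath.startsWith("/") ? contextPath.substring(1) : contextPath;
        // Root context gets ROOT.xml; nested paths use '#' instead of '/'.
        String baseName = trimmed.isEmpty() ? "ROOT" : trimmed.replace('/', '#');
        return tomcatDir.resolve("conf")
                .resolve("Catalina")
                .resolve(hostName)
                .resolve(baseName + ".xml");
    }
}
```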

You can try to do this in your development environment, but you'll find that Eclipse will throw away your file again. As I said, it has taken control over your Tomcat installation. Hence my suggestion to put it in the global context.xml file instead.

Now for a bit of a fun addition: what if we want to know from within the application code whether we are running in a development environment? That might sound like a design smell, but I for one tend to allow certain things in a development environment that I do not allow in any other environment, to expedite the development process; think of relaxing security measures and such. We can facilitate something like that quite easily by defining a property in the JNDI context which we can interrogate from the code. In the global context.xml file (the one in the Servers project) you can add this inside the Context element:

<Environment name="DEPLOYMENT" value="DEV" type="java.lang.String" override="false"/>

And then in the code we can do this:

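boolean dev = false;
try {
    Context initCtx = new InitialContext();
    String env = (String) initCtx.lookup("java:comp/env/DEPLOYMENT");
    dev = "DEV".equals(env);
} catch (NamingException e) {
    // DEPLOYMENT is not defined on this server: not a development environment
}

On your test/acceptance/production server you then simply do NOT define this element; the lookup throws a NameNotFoundException (a subclass of NamingException), which is caught, and the boolean variable stays false. Easy!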

You could add any properties you want of course; if you want them to be global add them to the context.xml, if you want them to be local to one application only, then add them to the application specific xml file.
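If you end up reading several such properties, a small helper that falls back to a default when a name isn't bound keeps the lookups tidy. This is a sketch assuming string-typed Environment entries under `java:comp/env`; `JndiSettings`, `getOrDefault` and `isDev` are hypothetical names. Outside a container (for example in a unit test) there is no initial context at all, the lookup throws a NoInitialContextException, and the helper simply returns the default.

```java
import javax.naming.Context;
import javax.naming.InitialContext;
import javax.naming.NamingException;

// Hypothetical helper for reading <Environment> entries from the webapp's JNDI context.
final class JndiSettings {
    private JndiSettings() {
    }

    // Returns the bound value, or defaultValue when the name is missing or no
    // JNDI context is available (NameNotFoundException and NoInitialContextException
    // are both NamingExceptions).
    static String getOrDefault(String name, String defaultValue) {
        try {
            Context ctx = new InitialContext();
            try {
                Object value = ctx.lookup("java:comp/env/" + name);
                return value instanceof String ? (String) value : defaultValue;
            } finally {
                ctx.close();
            }
        } catch (NamingException e) {
            return defaultValue;
        }
    }

    static boolean isDev() {
        return "DEV".equals(getOrDefault("DEPLOYMENT", "PROD"));
    }
}
```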

Tomcat 7: Dealing with republishing

The Java debugger has a neat hotswap function where code changes can be "uploaded" to a running JVM instance, effectively allowing you to change the code of a webapp while you have it open in your browser. Since this is a feature of the JVM debugger, it is only activated when you start the server in debug mode from Eclipse. This works up to a certain point of course; you cannot make changes to the class structure (for example adding methods or fields) without a full webapp reload being needed.

However, when you try to make a change to a class or resource while deploying to Tomcat, you may run into the problem that the entire web application reloads (but only if you did not follow my instructions so far)! Not only is that highly annoying and time consuming, it will also quickly make you run into the dreaded "out of permgen space" problem (for which there may be some steps to attack it; check the comments of this article). This problem was especially apparent for me as I bundled Richfaces when starting out with this article; two redeploys were all it took before I had to reboot the server.

Fortunately, this has a very easy fix: simply tell Tomcat that it should not automatically reload the application. This is that "reloadable" property I told you to change all the way back in the section titled fixing the deployment settings. If you still had it on at this point, simply disable it, republish the server and try again; you'll find that the webapp no longer redeploys, but the changes you make, even changes to for example a JSF backing bean method, are still automatically visible. Don't forget to hit reload in the browser of course ;)

Eclipse: Web resources not hot-deploying?

I ran into the issue where, using this hot-deployment scheme, classes were being properly redeployed but web resources in for example src/main/webapp or src/main/resources were not, even though those directories were part of the deployment assembly! After struggling with it for a long time I figured out that I had screwed up the hot-deployment settings: I had set the server publishing settings to never publish automatically, whereas by default they should be set to automatically publish when resources change. So check that Eclipse is allowed to publish. When you change this setting, don't forget to keep the reloadable property of the web application set to false as described above.

Final thoughts: adapt to your needs

Of course JSF is only an example; you can substitute any web framework you want. You could yank out Primefaces and use vanilla JSF or another add-on framework (Icefaces, Richfaces). What I wanted to do was create an environment that is very close to an enterprise application but without all the added weight. You don't need an application server, you don't need an EJB layer, and you don't need an application built out of multiple tiers, layers, boilerplate code and other madness that generally makes Ruby or PHP scripters snigger at you behind your back.

Simple = better. With Java as the base it can be simple too... but it takes some major effort to get it to that point. Always those dreaded configuration files; we never seem to be rid of them, no matter how hard people try. I hope this article helps you wade through the boring setup stuff and gets you to the juicy, meaty side of web development: actually creating something and seeing it appear in your browser!